When I heard it was possible to run AI evals on GitHub for free, I was really excited! But when I tried to learn more, I was disappointed by the lack of documentation and content about how to use it. That’s why I decided to write the tutorial I wish had been available when I started. To make this as practical as possible, I’ll use my Improve English project as an example.

Just a heads-up: I was learning as I went, so someone (or my future self) will probably spot things I could’ve done better. If that’s you, please leave a comment so I can improve this post.

How to enable GitHub Models?

For a single repository: open the repository settings, in the left sidebar click on “Models (Preview)” and then enable it.

For organizations: open the organization settings, in the left sidebar click on “Models (Preview)” and then on “Development” and enable it. Optionally you can select to only make available a set of models.

What is the easiest way to start?

Before changing the code or asking someone to update it for this feature, I recommend first testing GitHub Models to see if they actually fulfill all your requirements. So, start by copying your prompts and saving each one as a .prompt.yaml file in your GitHub repo.

What is the prompt file structure?

Required

Just the prompt messages roles (system and user) are required, that can have a {{variable}}. To keep things simple, I’m using a single variable, the user input.

messages:

- role: system

content: |

SYSTEM MESSAGE HERE

- role: user

content: |

USER MESSAGE HERE

{{input}}Optional

There are several optional information that you can add to help you better organize your prompts.

name: Improve English

description: Provides corrections and suggestions to improve my English writing skills.

model: openai/gpt-4.1

modelParameters:

temperature: 0.5Besides the items above, there is some additional information specifically for evals, which I’ll cover in another section.

My Improve English prompt for Github Models

name: Improve English

description: Provides corrections and suggestions to improve my English writing skills.

model: openai/gpt-4.1

modelParameters:

temperature: 0.5

messages:

- role: system

content: |

You are an expert in English grammar, style, and fluency, with a deep

understanding of common mistakes made by Brazilian Portuguese speakers

learning American English. Your goal is to analyze a given sentence,

identify errors, provide explanations, and suggest improved versions, all

while maintaining a casual and conversational tone suitable for a small

SaaS startup.

Your response **must strictly follow** the **JSON format** below. **Do not

add any extra text, explanations, or markdown outside the JSON output.**

Always return a well-formed JSON object.

---

## **Output Format (Strict JSON)**

{

"original_sentence": "[User's input]",

"error_analysis": [

{

"mistake": "[Mistake #1]",

"correction": "[Corrected version]",

"explanation": "[Brief explanation]"

},

{

"mistake": "[Mistake #2]",

"correction": "[Corrected version]",

"explanation": "[Brief explanation]"

}

// If no mistakes are found, return an empty array: "error_analysis": []

],

"corrected_versions": {

"concise_natural": "[Concise & Natural version]",

"friendly_engaging": "[Friendly & Engaging version]",

"smooth_polished": "[Smooth & Polished version]"

}

}

---

## **Processing Rules:**

1. **Error Analysis:**

- Identify and list **each mistake** in the original sentence.

- Provide the **corrected version** and a **brief explanation** for why it was incorrect.

- If no errors exist, return `"error_analysis": []`.

2. **Corrected Versions (Casual & Conversational):**

- **Concise & Natural:** A version that is clear, to the point, and sounds natural in a casual work setting.

- **Friendly & Engaging:** A version that is light, engaging, and feels like a Slack message or informal discussion.

- **Smooth & Polished:** A version that keeps things casual but with a bit more refinement and structure.

3. **Strict JSON Formatting Rules:**

- **Always return a valid JSON object**—no extra explanations, headers, or markdown outside the JSON.

- **If no errors are found,** `"error_analysis"` must be an empty array (`[]`).

- **The `"corrected_versions"` section must always be present**, even if only minor refinements were made.

- **Follow American English conventions** and ensure a conversational tone.

- Just return the JSON object without wrapping it aroung ```.

---

## **Example Input:**

**Sentence:** `"I will send to you the report in the next week."`

## **Example Output (Strict JSON Format):**

{

"original_sentence": "I will send to you the report in the next week.",

"error_analysis": [

{

"mistake": "Incorrect word order ('send to you')",

"correction": "I will send you the report next week.",

"explanation": "In English, 'send you' is more natural than 'send to you' in this structure."

},

{

"mistake": "Unnecessary article ('the next week')",

"correction": "I will send you the report next week.",

"explanation": "In English, 'next week' doesn't need 'the' before it."

}

],

"corrected_versions": {

"concise_natural": "I'll send you the report next week.",

"friendly_engaging": "Hey, I’ll get that report over to you next week!",

"smooth_polished": "I’ll have the report sent your way next week."

}

}

- role: user

content: |

{{input}}Testing your prompt

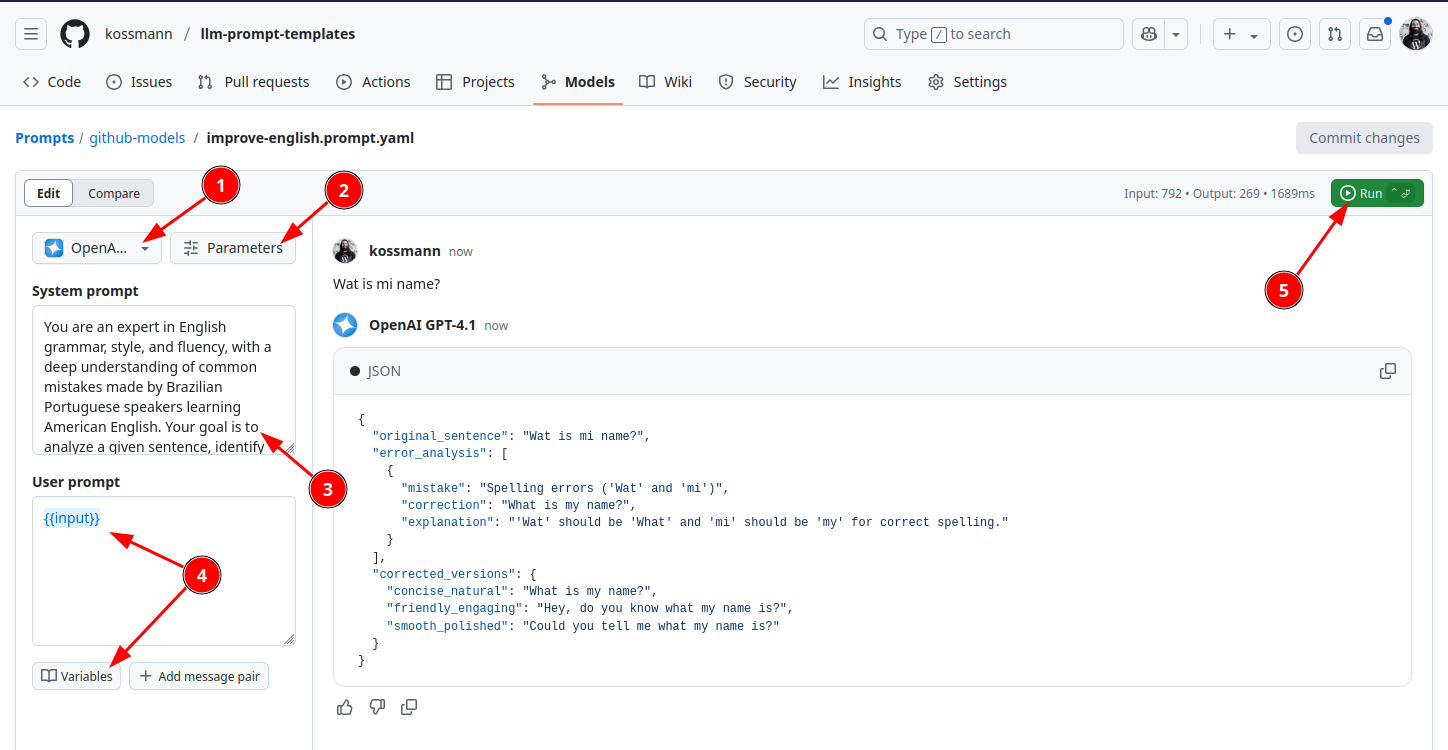

After adding to your GitHub repository a file with the extension and structure mentioned above, you can already start testing your prompt. Open your GitHub repository page, click on the “Models” tab, then in the left sidebar click “Prompts” and finally select your prompt.

By default you will see the “Edit” mode, where you can make changes in your prompt and run them using a single LLM model.

Here is a quick step by step on how to use it:

- Select a LLM Model. It will automatically select the one you added in the

modeldata of your prompt file.; - Define the model parameters like response format (text or JSON), past messages included, temperature and others). Read more about model parameters;

- You can change the prompt here;

- Add the user prompt. If you are using a variable like the screenshot, click on the “Variables” button to add its content;

- Click on “Run” to see the model response.

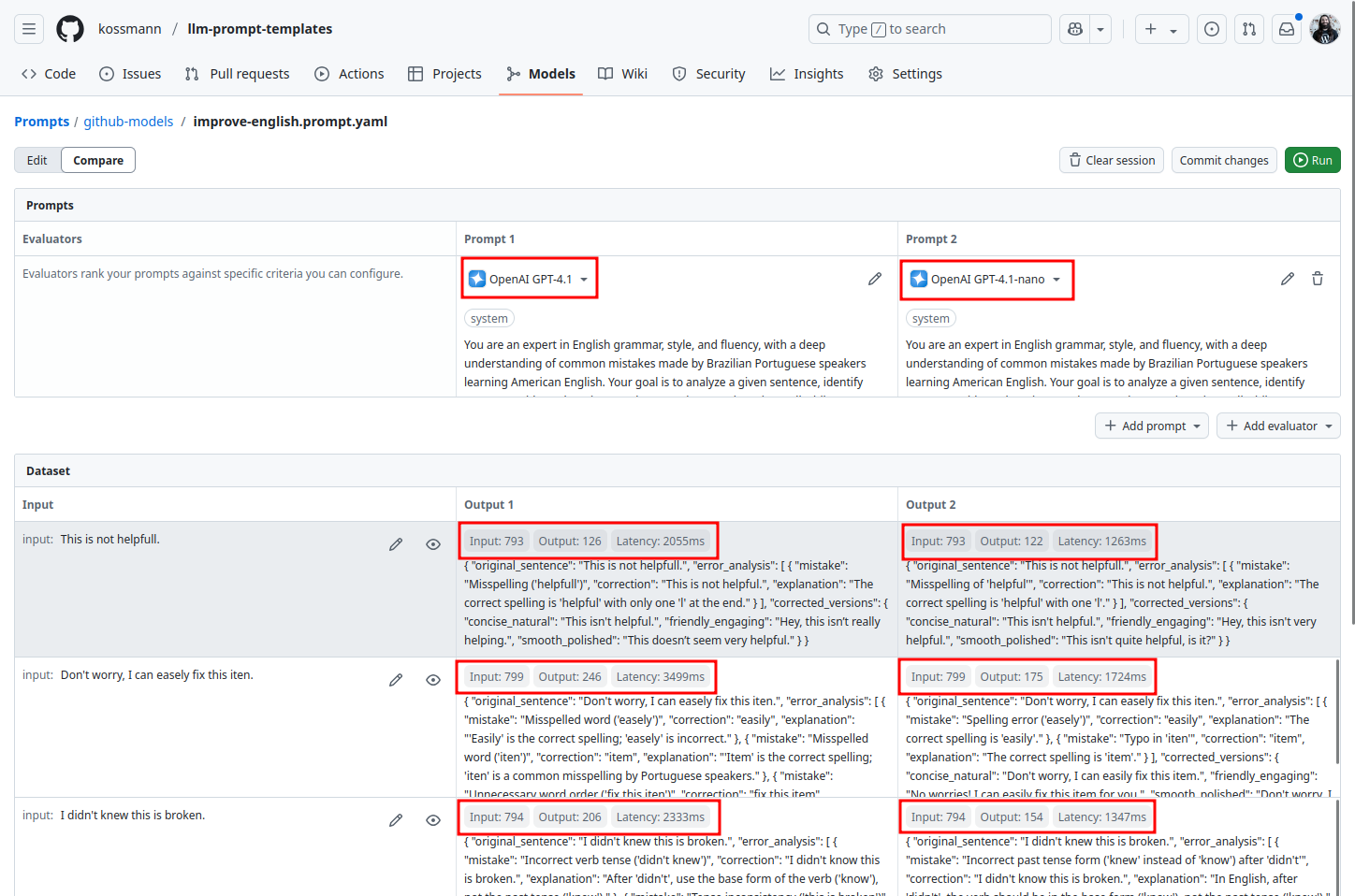

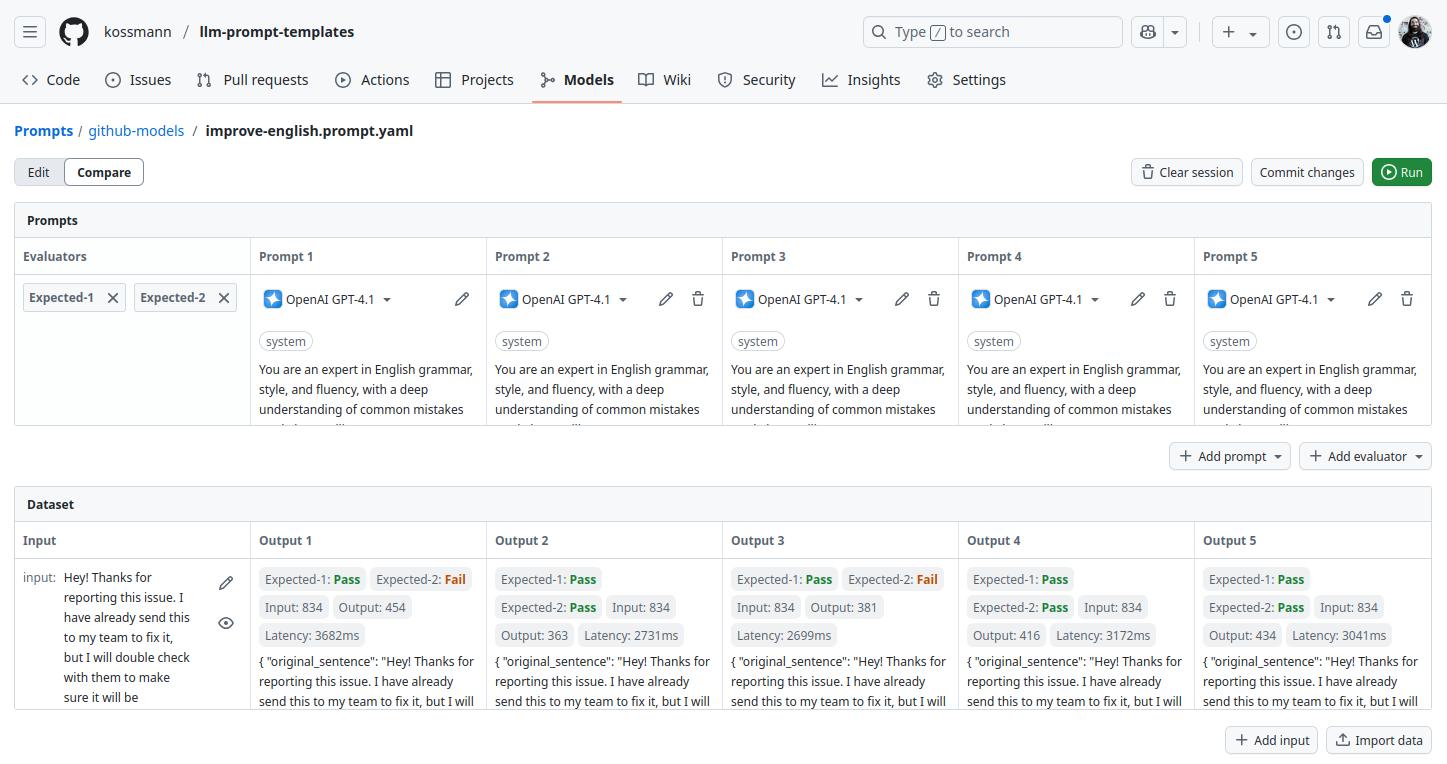

Comparing different prompts or models with test data

Compare mode is where things get interesting. To get the most out of this screen, it’s helpful to add some test data first to the prompt file. I recommend using real data, and if you don’t have any, try not to use examples for demo presentations that are too polished. For instance, a demo example might be, “please refund my order number 1234. My account number is 6789”, but a real-world example would be more like “my toaster is not working”.

testData:

- input: |

This is not helpfull.

- input: |

Don't worry, I can easely fix this iten.

- input: |

I didn't knew this is broken.

- input: |

Should I add a new item on the website menu?

- input: |

Hey! Thanks for reporting this issue. I have already send this to my team to fix it, but I will double check with them to make sure it will be prioritized until 13 july.With this test data, you can easily run the tests using your prompt.

Before running your prompt [2], make sure you have the correct parameters[1] (like using a JSON response format), and if you change something don’t forget to scroll down and click in the update button to save your changes (I can’t remember the amount of times that I forgot to do this).

💡Tip: To review the JSON responses, I like to use Toptal’s JSON Formatter.

You can also test different versions of a prompt or models. To do this, click on the “Add prompt” button below your prompt and select “Copy original prompt” and then make a change in the prompt or in the model.

In the screenshot above, I tested the same prompt with two models. Beyond checking the output, it’s also useful to look at the differences in latency and token count.

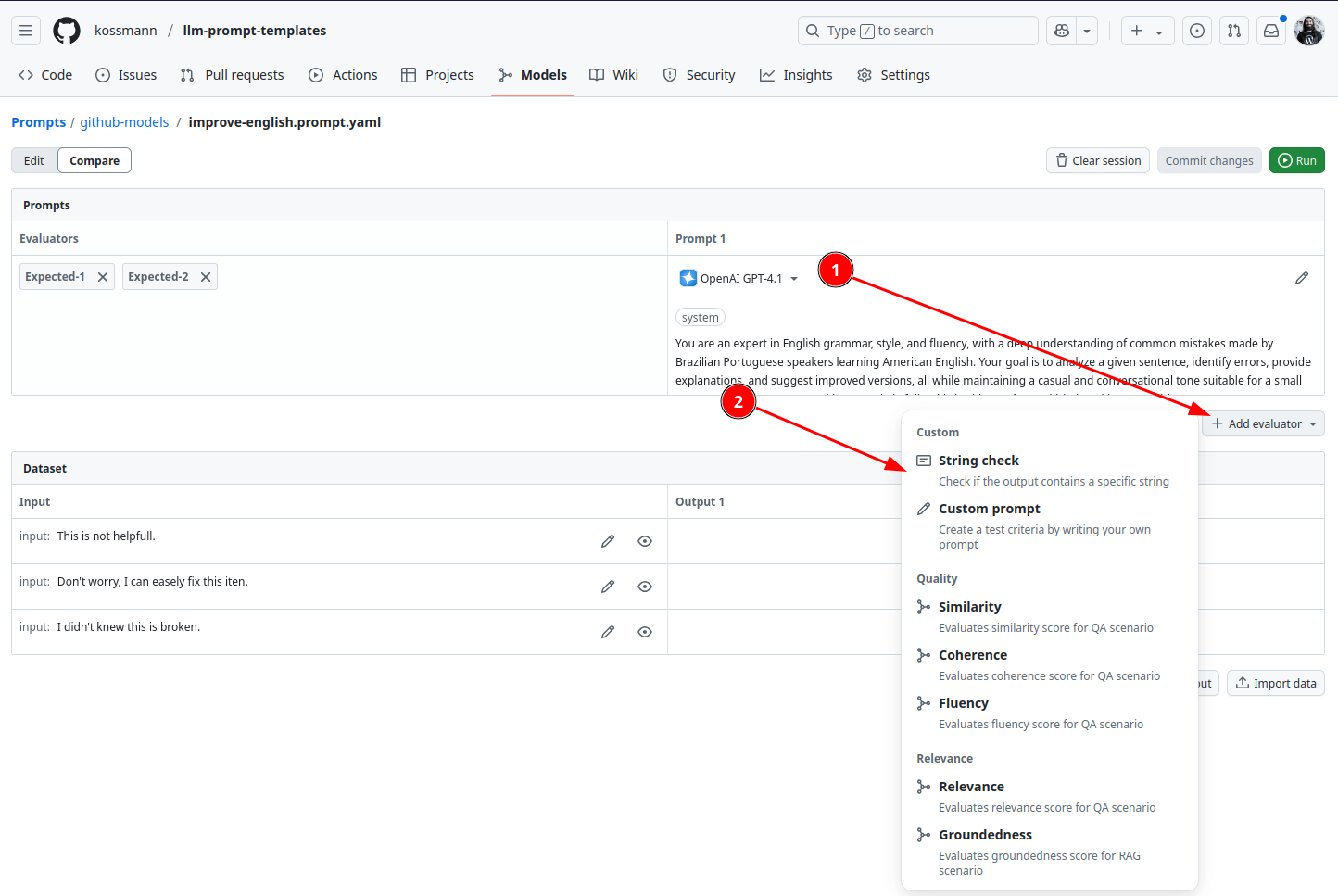

Evaluating outputs automatically

Checking each result manually takes a lot of time, so this is where evaluators (aka evals) comes to the rescue.

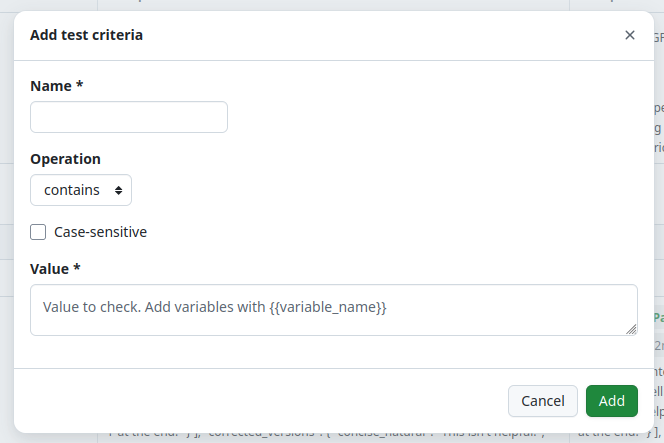

String check

This is a simple evaluator that checks for a specific string in the output. If you want to check more than one string, you’ll need a separate evaluator for each. For my test, I made two evaluators and just duplicated the string in the second one when there was only one to check. Here’s the updated test data with the evaluators.

testData:

- input: |

This is not helpfull.

expected-1: helpful

expected-2: helpful

- input: |

Don't worry, I can easely fix this iten.

expected-1: easily

expected-2: item

- input: |

I didn't knew this is broken.

expected-1: know

expected-2: was

- input: |

Should I add a new item on the website menu?

expected-1: item to

expected-2: item to

- input: |

Hey! Thanks for reporting this issue. I have already send this to my team to fix it, but I will double check with them to make sure it will be prioritized until 13 july.

expected-1: sent

expected-2: by July 13

evaluators:

- name: Expected-1

string:

contains: '{{expected-1}}'

strict: true

- name: Expected-2

string:

contains: '{{expected-2}}'

strict: trueSince there isn’t proper documentation for this evaluator, I used the web interface to configure it and committed the change to see what it would add to the prompt file. That’s how I found out that strict: true enables case-sensitive comparison.

💡Tip: Be careful when using the web interface to commit changes to your prompt because it can unintentionally remove some content that doesn’t stricly follow a specific format, like the {{expected-1}} and {{expected-2}} variables inside the testData.

Unfortunately, for this specific use case where the output is more complex, the evaluator isn’t very accurate because it can’t tell if the string is in the error_analysis or corrected_versions section. For example, both models passed all the tests, even though OpenAI GPT-4.1-nano didn’t mention the by July 13 correction in the error_analysis.

Model: OpenAI GPT-4.1

{

"original_sentence": "Hey! Thanks for reporting this issue. I have already send this to my team to fix it, but I will double check with them to make sure it will be prioritized until 13 july.",

"error_analysis": [

{

"mistake": "Incorrect verb form ('have already send')",

"correction": "have already sent",

"explanation": "The correct past participle of 'send' is 'sent', not 'send'."

},

{

"mistake": "Unclear time expression ('prioritized until 13 july')",

"correction": "prioritized by July 13",

"explanation": "In English, 'by' is used to indicate a deadline. Also, months should be capitalized."

},

{

"mistake": "Missing comma after introductory phrase ('Hey!')",

"correction": "Hey, thanks for reporting this issue.",

"explanation": "A comma is used after an interjection or introductory phrase."

}

],

"corrected_versions": {

"concise_natural": "Hey, thanks for reporting this! I've already sent it to my team, but I'll double-check to make sure it gets prioritized by July 13.",

"friendly_engaging": "Thanks so much for flagging this! I’ve already sent it over to my team, but I’ll double-check with them to make sure it’s on track to be prioritized by July 13.",

"smooth_polished": "Hey, I appreciate you reporting this issue. I’ve already passed it along to my team, but I’ll follow up to ensure it’s prioritized by July 13."

}

}Model: OpenAI GPT-4.1-nano

{

"original_sentence": "Hey! Thanks for reporting this issue. I have already send this to my team to fix it, but I will double check with them to make sure it will be prioritized until 13 july.",

"error_analysis": [

{

"mistake": "Incorrect past participle of 'send' ('send' instead of 'sent')",

"correction": "I have already sent this to my team to fix it",

"explanation": "In perfect tenses, 'send' should be 'sent' as the past participle."

},

{

"mistake": "Inconsistent date format ('13 july')",

"correction": "July 13",

"explanation": "In American English, dates are formatted as 'Month Day'."

},

{

"mistake": "Missing hyphen in 'double check'",

"correction": "double-check",

"explanation": "When used as a verb, 'double-check' is hyphenated."

}

],

"corrected_versions": {

"concise_natural": "Hey! Thanks for reporting this. I’ve already sent it to my team, but I’ll double-check with them to make sure it’s prioritized by July 13.",

"friendly_engaging": "Hey! Thanks for flagging this. I’ve already sent it to my team, but I’ll double-check to ensure it’s prioritized by July 13.",

"smooth_polished": "Hi! Thanks for reporting this. I’ve already forwarded it to my team, and I’ll double-check to ensure it’s prioritized by July 13."

}

}Another way to look at this limitation is that I could’ve taken a different approach and used "correction": "prioritized by July 13" as the evaluator, or organized the JSON output so it’s easier to compare a string containing the key and the expected value. Let’s make the first change so I can show another use case. Here’s what the test data would look like.

- input: |

Hey! Thanks for reporting this issue. I have already send this to my team to fix it, but I will double check with them to make sure it will be prioritized until 13 july.

expected-1: sent

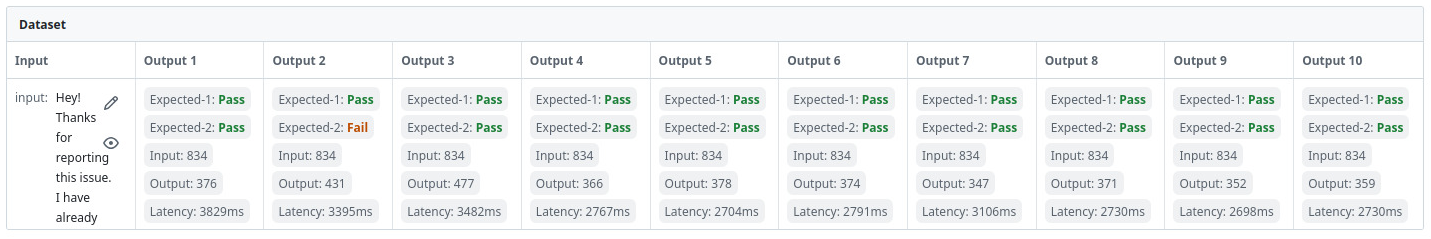

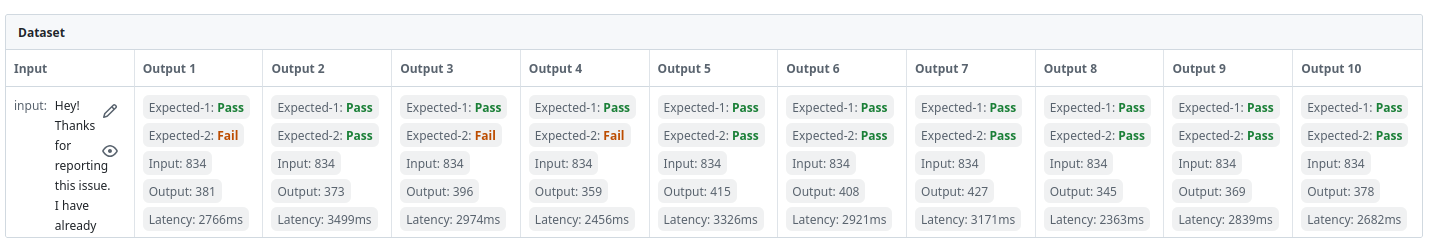

expected-2: '"correction": "prioritized by July 13"'Since LLM outputs are unpredictable (non-deterministic), it’s essential to run multiple tests to see how much the results vary. For instance, I used the same prompt five times with the same model, and it failed the Expected-2 evaluator twice, which means a 60% success rate overall. Definitely not good enough for a real product! On the upside, the new approach for the evaluator worked, so I’ll focus on refining the prompt to improve the success rate.

As a final quick experiment, I ran two tests using the same prompt and model ten times each. The first had a 90% success rate, and the second had 70%. When I tried it a third time, I had to wait 60 seconds due to the rate limit.

Prompt file example with all evals

As a reference I created this test prompt file containing all of the evals available in the web interface when I wrote this post so I can better understand how they are stored in the prompt file.

messages:

- role: system

content: this is the system prompt.

- role: user

content: this is the user prompt {{variable1}} and {{variable2}}.

model: openai/gpt-4.1-nano

testData:

- expected: This is what it's expected

variable1: value for variable1

variable2: value for variable2

evaluators:

- name: eval-contains

string:

contains: test1

- name: eval-contains-casesensitive

string:

strict: true

contains: test2

- name: eval-startwith

string:

startsWith: test3

- name: eval-endswith

string:

endsWith: test4

- name: eval-customprompt-passfail

llm:

model: gpt-4.1-nano

modelId: >-

azureml://registries/azure-openai/models/gpt-4.1-nano/versions/2025-04-14

prompt: this is the prompt

choices:

- choice: resultPass

score: 1

- choice: resultFail

score: 0

systemPrompt: this is the system prompt

- name: eval-customprompt-scores

llm:

model: gpt-4.1-nano

modelId: >-

azureml://registries/azure-openai/models/gpt-4.1-nano/versions/2025-04-14

prompt: this is the prompt

choices:

- choice: resultscore0

score: 0

- choice: resultscore50

score: 50

- choice: resultscore100

score: 100

- choice: resultscore200

score: 200

systemPrompt: this is the system prompt

- name: Similarity

uses: github/similarity

- name: Coherence

uses: github/coherence

- name: Fluency

uses: github/fluency

- name: Relevance

uses: github/relevance

- name: Groundedness

uses: github/groundedness

responseFormat: text

modelParameters:

temperature: 0.95

top_p: 0.95

stop:

- Force cutting the output when this string occurs.Other evals that I haven´t tested

Since this post is already pretty long, I’ll save the other evals—like Custom Prompt, Similarity, Coherence, Fluency, Relevance, and Groundedness—for part 2.

The complete Improve English prompt for Github Models

Here is the full prompt with all of the evals that I tested for this post.

name: Improve English

description: Provides corrections and suggestions to improve my English writing skills.

model: openai/gpt-4.1

modelParameters:

temperature: 0.5

messages:

- role: system

content: |

You are an expert in English grammar, style, and fluency, with a deep

understanding of common mistakes made by Brazilian Portuguese speakers

learning American English. Your goal is to analyze a given sentence,

identify errors, provide explanations, and suggest improved versions, all

while maintaining a casual and conversational tone suitable for a small

SaaS startup.

Your response **must strictly follow** the **JSON format** below. **Do not

add any extra text, explanations, or markdown outside the JSON output.**

Always return a well-formed JSON object.

---

## **Output Format (Strict JSON)**

{

"original_sentence": "[User's input]",

"error_analysis": [

{

"mistake": "[Mistake #1]",

"correction": "[Corrected version]",

"explanation": "[Brief explanation]"

},

{

"mistake": "[Mistake #2]",

"correction": "[Corrected version]",

"explanation": "[Brief explanation]"

}

// If no mistakes are found, return an empty array: "error_analysis": []

],

"corrected_versions": {

"concise_natural": "[Concise & Natural version]",

"friendly_engaging": "[Friendly & Engaging version]",

"smooth_polished": "[Smooth & Polished version]"

}

}

---

## **Processing Rules:**

1. **Error Analysis:**

- Identify and list **each mistake** in the original sentence.

- Provide the **corrected version** and a **brief explanation** for why it was incorrect.

- If no errors exist, return `"error_analysis": []`.

2. **Corrected Versions (Casual & Conversational):**

- **Concise & Natural:** A version that is clear, to the point, and sounds natural in a casual work setting.

- **Friendly & Engaging:** A version that is light, engaging, and feels like a Slack message or informal discussion.

- **Smooth & Polished:** A version that keeps things casual but with a bit more refinement and structure.

3. **Strict JSON Formatting Rules:**

- **Always return a valid JSON object**—no extra explanations, headers, or markdown outside the JSON.

- **If no errors are found,** `"error_analysis"` must be an empty array (`[]`).

- **The `"corrected_versions"` section must always be present**, even if only minor refinements were made.

- **Follow American English conventions** and ensure a conversational tone.

- Just return the JSON object without wrapping it aroung ```.

---

## **Example Input:**

**Sentence:** `"I will send to you the report in the next week."`

## **Example Output (Strict JSON Format):**

{

"original_sentence": "I will send to you the report in the next week.",

"error_analysis": [

{

"mistake": "Incorrect word order ('send to you')",

"correction": "I will send you the report next week.",

"explanation": "In English, 'send you' is more natural than 'send to you' in this structure."

},

{

"mistake": "Unnecessary article ('the next week')",

"correction": "I will send you the report next week.",

"explanation": "In English, 'next week' doesn't need 'the' before it."

}

],

"corrected_versions": {

"concise_natural": "I'll send you the report next week.",

"friendly_engaging": "Hey, I’ll get that report over to you next week!",

"smooth_polished": "I’ll have the report sent your way next week."

}

}

- role: user

content: |

{{input}}

testData:

- input: |

This is not helpfull.

expected-1: helpful

expected-2: helpful

- input: |

Don't worry, I can easely fix this iten.

expected-1: easily

expected-2: item

- input: |

I didn't knew this is broken.

expected-1: know

expected-2: was

- input: |

Should I add a new item on the website menu?

expected-1: item to

expected-2: item to

- input: |

Hey! Thanks for reporting this issue. I have already send this to my team to fix it, but I will double check with them to make sure it will be prioritized until 13 july.

expected-1: sent

expected-2: '"correction": "prioritized by July 13"'

evaluators:

- name: Expected-1

string:

contains: '{{expected-1}}'

strict: true

- name: Expected-2

string:

contains: '{{expected-2}}'

strict: trueCurrent Limitations and wishlist

I know this feature is still in preview and can’t handle complex prompt templates. Here’s a list of some limitations I’ve noticed, plus a wishlist.

- There’s no summary of the eval score (e.g. passed 8 of 10 tests) or a log of previous tests;

- No way to export the tests results;

- Is very easy to hit the free rate limit and there’s an option to enable paid usage.

- The String Check criteria does not support a “do not contain” operation, which is essential for some types of tests.

- A lot of the model parameters (like Max Tokens, Top P, and Presence Penalty) are not stored in the prompt file.

Ideas for the next learning steps

Here are some ideas and question to explore in a next learning session.

- How can I use the JSON response format without getting the error

'messages' must contain the word 'json' in some form, to use 'response_format' of type 'json_object'? I tried adding the wordJSONin theSystem promptandUser promptbut I still got the error; - How to use multiple variables?

- Can I run eval in the terminal to add this as part of a GitHub Actions?

- Learn how to use other available evals: Similarity, Coherence, Fluency, Relevance, Groundedness;

- Learn how to use model settings like Max Tokens, Top P, and Presence Penalty.

- Improve my prompt output to make it easier to run evals, like being able to identify if a specific English mistake was found (now it only checks if the correct word exists in the output, which could be outside the “error_analysis”).

- Continue to improve my eval from what I learned in this post, including making the JSON output of my prompt easier to use for evals, like the

prioritized by July 13example I wrote about. - Keep improving my evaluation process based on what I learned here, and make the JSON output from my prompt easier to use for evaluations, just like the “prioritized by July 13” example.

Leave a Reply